October 4, 2024

How to Install a Local LLM on macOS in 10 Minutes (and use it in NeoVim)

Today, I'm going to show you how to install a local LLM on macOS in just 10 minutes. No complicated setups, no paid APIs, and no tedious installations. Plus, as a bonus, I'll show you how to use it directly in your code editor, NeoVim.

As a developer, I'm always learning new programming languages, and honestly, having an assistant like ChatGPT can save a ton of time. Instead of spending hours digging through documentation, you get a clear, context-aware answer in just seconds.

But here's the thing: what if you could avoid using the browser and ask questions directly from NeoVim? You know, to stay in the flow. Sure, there are plugins for that… but most of them require an OpenAI API key, which isn't free. Additionally, using the OpenAI API means your data is sent to their servers, and every request pays the latency of a network round trip.

That's when I started wondering: is there an offline solution? Something that doesn't involve compiling complex models or installing dozens of Python dependencies… something simple and fast. Well, I found an amazing solution, and I'm going to share it with you!

Introducing the Model: Codestral by MistralAI

The model we're talking about today is called Codestral, developed by MistralAI. You might have heard of them; they're making waves in the language model space, especially for their ability to run high-performance models on relatively modest machines.

A few months ago, they released Codestral, a 22-billion-parameter model specifically optimized for coding tasks and capable of understanding dozens of programming languages. And guess what? I tested it, and the installation took me less than 10 minutes, start to finish.

The Key Tool: Ollama

The magic behind this quick installation is a fantastic tool I discovered called Ollama. It's kind of like "Docker" for AI models, but way easier to use. If you're on macOS, you can install it via Homebrew with a single command.

brew install ollama

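Once Ollama is installed, starting its server and downloading the Codestral model each take a single command. The server command stays in the foreground, so leave it running in one terminal tab and run the pull from another. Now, it's a download of about 12 GB, but once it's on your machine, everything runs locally via a REST API (by default at http://localhost:11434) that's also compatible with OpenAI's API.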

ollama serve

ollama pull codestral

If you're looking for models suited to more general tasks, there are also alternatives like Gemma2 (27B). You'll have plenty of options to choose from!

ollama pull gemma2:27b

You can now start prompting a model with a simple command from the terminal.

ollama run codestral
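Besides the interactive prompt, the same model can be reached over the local REST API. Here is a minimal sketch, assuming the server is running on Ollama's default address and using its /api/generate endpoint. The helper names (json_escape, build_payload, ask_ollama) and the OLLAMA_URL variable are hypothetical, made up for this example.

```shell
# Assumption: Ollama's default address; OLLAMA_URL is a name chosen for this sketch.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Escape backslashes and double quotes so the text fits inside a JSON string.
# Newlines in the prompt are not handled in this sketch.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the JSON body for POST /api/generate ($1 = model, $2 = prompt).
# "stream": false asks for one complete JSON reply instead of a token stream.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the prompt to the local server and print the raw JSON reply.
ask_ollama() {
  build_payload "$1" "$2" |
    curl -sS "$OLLAMA_URL/api/generate" \
      -H 'Content-Type: application/json' -d @-
}

# Usage (needs a running server and a pulled model):
#   ask_ollama codestral 'Write a Lua function that reverses a string.'
```

The generated text comes back in the "response" field of the JSON reply.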

Integrating with NeoVim: ollama.nvim

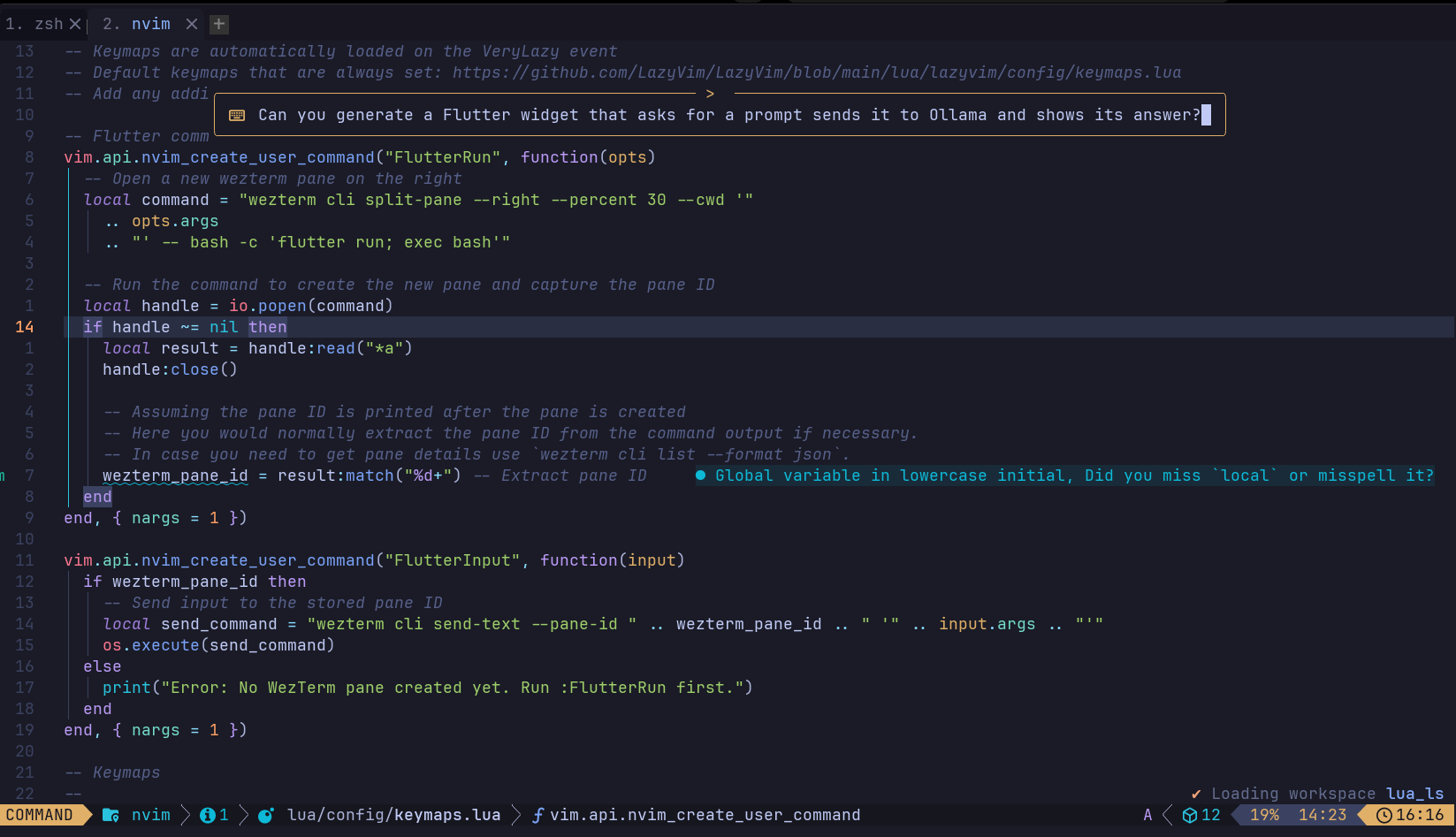

All of this is great, but I really wanted to integrate it directly into my code editor, NeoVim. Fortunately, there's a dedicated plugin called ollama.nvim.

Installing it is super simple. Just add the plugin to your configuration file (the snippet below uses the lazy.nvim spec format). Then, in the settings, you set Codestral as the default model. You can even configure different prompts for different use cases. For instance, I've set one prompt for Codestral (for coding) and another for Gemma for more general queries. I also added some keyboard shortcuts for quick interactions with the models. For example, I use <leader>mm for Codestral and <leader>mc for Gemma.

{
  "nomnivore/ollama.nvim",
  dependencies = {
    "nvim-lua/plenary.nvim",
  },
  -- Load the plugin lazily, on the :Ollama command or one of the keys below
  cmd = { "Ollama" },
  keys = {
    {
      -- Send the input as-is to the default model (Codestral)
      "<leader>mm",
      ":<c-u>lua require('ollama').prompt('Raw')<cr>",
      desc = "ollama prompt",
      mode = { "n", "v" },
    },
    {
      -- Use the custom "General" prompt defined in opts (Gemma 2)
      "<leader>mc",
      ":<c-u>lua require('ollama').prompt('General')<cr>",
      desc = "ollama prompt for a general question",
      mode = { "n", "v" },
    },
  },
  opts = {
    -- Default model for prompts that do not set their own
    model = "codestral",
    prompts = {
      General = {
        prompt = "$input",
        input_label = "> ",
        model = "gemma2:27b",
        action = "display",
      },
    },
  },
},
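Since this config refers to two models by name, it can help to confirm that both are actually present locally before trying the shortcuts. The sketch below is one way to do that, assuming the usual table printed by ollama list (a header row, then one model per row with the name in the first column). The has_model helper is hypothetical, not part of Ollama.

```shell
# Reads `ollama list` output on stdin and succeeds if model $1 is listed.
# A bare name such as "codestral" also matches the "codestral:latest" tag.
has_model() {
  awk -v want="$1" '
    NR > 1 {                       # skip the header row
      name = $1
      sub(/:latest$/, "", name)    # treat "x:latest" and "x" as the same
      if ($1 == want || name == want) found = 1
    }
    END { exit !found }
  '
}

# Usage (needs Ollama installed):
#   for m in codestral gemma2:27b; do
#     ollama list | has_model "$m" || echo "missing: $m (run: ollama pull $m)"
#   done
```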

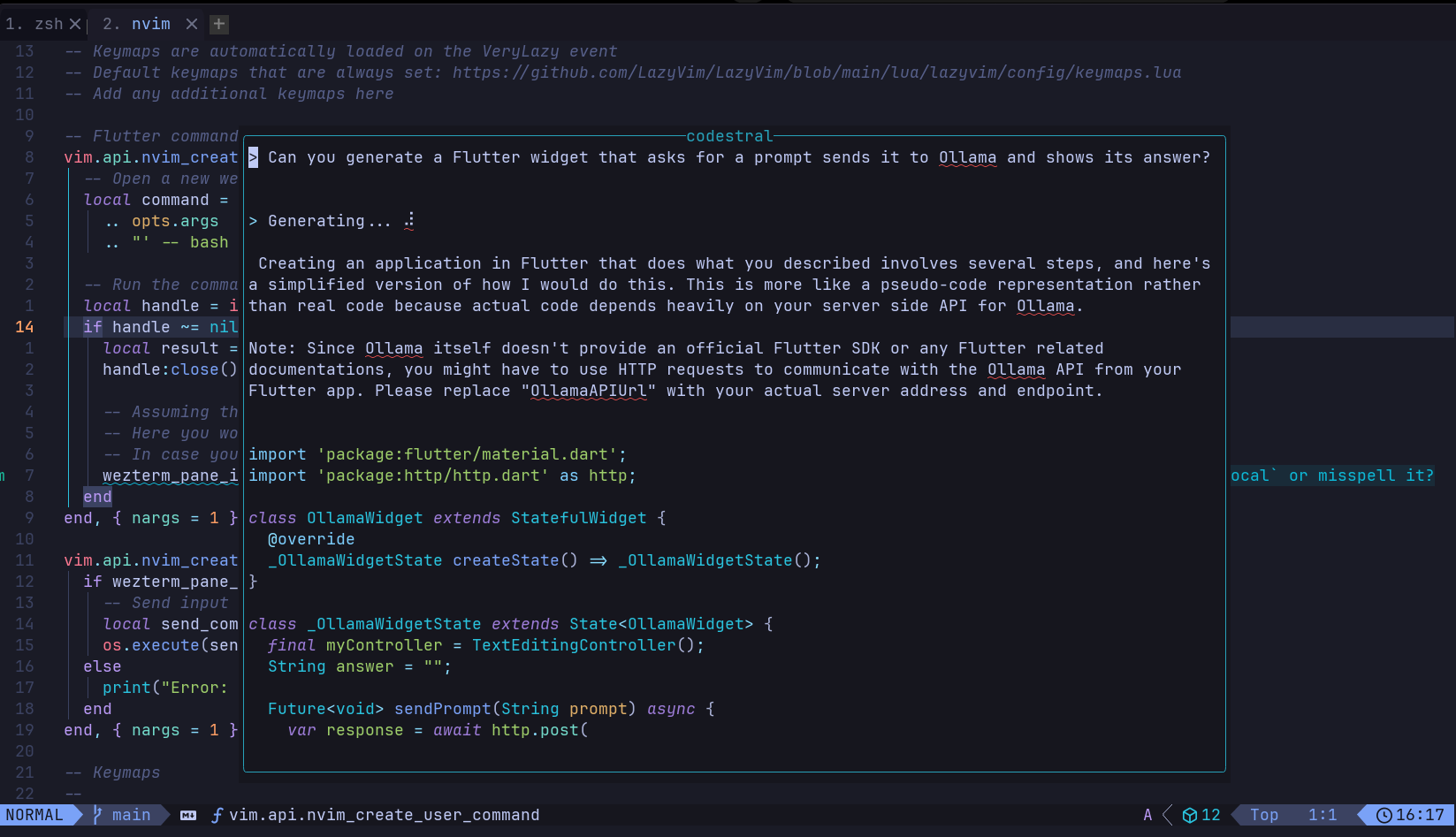

Once everything is set up, you just use the shortcut, type your question, and bam! The answer pops up right inside NeoVim, no need to switch windows.

Conclusion

Honestly, I highly recommend giving this solution a try. Whether you're working on coding projects or have other needs, having a local AI model is a game-changer. And the fact that it's all accessible through a local REST API? It's perfect if you want to automate tasks or build tools around it.
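As a starting point for that kind of tooling, here is a rough sketch that talks to the OpenAI-style chat route the local server exposes under /v1/chat/completions. It assumes the default address and a pulled codestral model. The helper names (json_escape, chat_payload, chat_ollama) and the OLLAMA_URL variable are hypothetical, chosen for this example.

```shell
# Assumption: Ollama's default address; OLLAMA_URL is a name chosen for this sketch.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Escape backslashes and double quotes for use inside a JSON string.
# Newlines in the message are not handled in this sketch.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build an OpenAI-style chat body with a single user message
# ($1 = model, $2 = message text).
chat_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# POST the body to the OpenAI-compatible route and print the raw JSON reply.
chat_ollama() {
  chat_payload "$1" "$2" |
    curl -sS "$OLLAMA_URL/v1/chat/completions" \
      -H 'Content-Type: application/json' -d @-
}

# Usage (needs a running server and a pulled model):
#   chat_ollama codestral 'Suggest a commit message for a typo fix.'
```

Because the request and response follow the OpenAI chat format, tools that let you change the API base URL can usually be pointed at http://localhost:11434/v1 instead of a paid endpoint.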